do_rreg() runs the regularized classification model pipeline. It splits the data into training and test sets, creates class-balanced case-control groups, and fits the model. It also performs hyperparameter optimization, fits the best model, tests it, and plots the variable importance of the features and boxplots of the top features.
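As a rough illustration of the first two steps, the base-R sketch below balances the classes and then makes a stratified train/test split. It is not do_rreg()'s code: it assumes that balancing means downsampling every class to the size of the smallest one, and that stratification means each class contributes about ratio of its rows to the training set. balance_downsample() and stratified_split() are hypothetical helpers.

```r
# Hypothetical helpers (not part of the package): class balancing and a
# stratified train/test split, written in base R for illustration.

# Assumption: balancing = downsampling every class to the smallest class size.
balance_downsample <- function(df, outcome, seed = 123) {
  set.seed(seed)
  groups <- split(seq_len(nrow(df)), df[[outcome]])  # row positions per class
  n_min <- min(lengths(groups))
  keep <- unlist(lapply(groups, function(idx) idx[sample.int(length(idx), n_min)]))
  df[sort(keep), , drop = FALSE]
}

# Stratified split: each class sends floor(ratio * n_class) rows to training.
stratified_split <- function(df, outcome, ratio = 0.75, seed = 123) {
  set.seed(seed)
  groups <- split(seq_len(nrow(df)), df[[outcome]])
  train_idx <- unlist(lapply(groups, function(idx) {
    idx[sample.int(length(idx), floor(ratio * length(idx)))]
  }))
  list(train = df[sort(train_idx), , drop = FALSE],
       test  = df[-train_idx, , drop = FALSE])
}

# Toy data: 12 cases and 30 controls
toy <- data.frame(id = sprintf("S%02d", 1:42),
                  Disease = rep(c("AML", "CLL"), times = c(12, 30)),
                  value = rnorm(42))
bal <- balance_downsample(toy, "Disease")
table(bal$Disease)         # 12 AML, 12 CLL
sets <- stratified_split(bal, "Disease", ratio = 0.75)
table(sets$train$Disease)  # 9 AML, 9 CLL; the remaining 6 rows form the test set
```

Balancing before splitting keeps both the training and the test set close to a 1:1 case-control ratio, so accuracy on the test set is not dominated by the larger group.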

Usage

do_rreg(
  olink_data,
  metadata,
  variable = "Disease",
  case,
  control,
  wide = TRUE,
  strata = TRUE,
  balance_groups = TRUE,
  only_female = NULL,
  only_male = NULL,
  exclude_cols = "Sex",
  ratio = 0.75,
  type = "lasso",
  cor_threshold = 0.9,
  cv_sets = 5,
  grid_size = 10,
  ncores = 4,
  hypopt_vis = TRUE,
  palette = NULL,
  vline = TRUE,
  subtitle = c("accuracy", "sensitivity", "specificity", "auc", "features",
    "top-features", "mixture"),
  varimp_yaxis_names = FALSE,
  nfeatures = 9,
  points = TRUE,
  boxplot_xaxis_names = FALSE,
  seed = 123
)

Arguments

- olink_data

Olink data, either in wide format (one row per sample, one column per protein) or in long format; see wide.

- metadata

Sample metadata. It must contain the column named in variable.

- variable

The variable to predict. Default is "Disease".

- case

The case group, given as a value of variable.

- control

The control group or groups, given as one or more values of variable.

- wide

Whether the data is wide format. Default is TRUE.

- strata

Whether to stratify the data splits by the outcome classes. Default is TRUE.

- balance_groups

Whether to balance the groups. Default is TRUE.

- only_female

Vector of diseases that are female specific. Default is NULL.

- only_male

Vector of diseases that are male specific. Default is NULL.

- exclude_cols

Columns to exclude from the data before the model is tuned. Default is "Sex".

- ratio

Proportion of the data assigned to the training set; the rest forms the test set. Default is 0.75.

- type

Type of regularization. Default is "lasso". Other options are "ridge" and "elnet" (elastic net).

- cor_threshold

Threshold for absolute correlation values. The minimum number of features is removed so that all remaining pairwise absolute correlations are below this value. Default is 0.9.

- cv_sets

Number of cross-validation folds. Default is 5.

- grid_size

Size of the hyperparameter optimization grid. Default is 10.

- ncores

Number of cores to use for parallel processing. Default is 4.

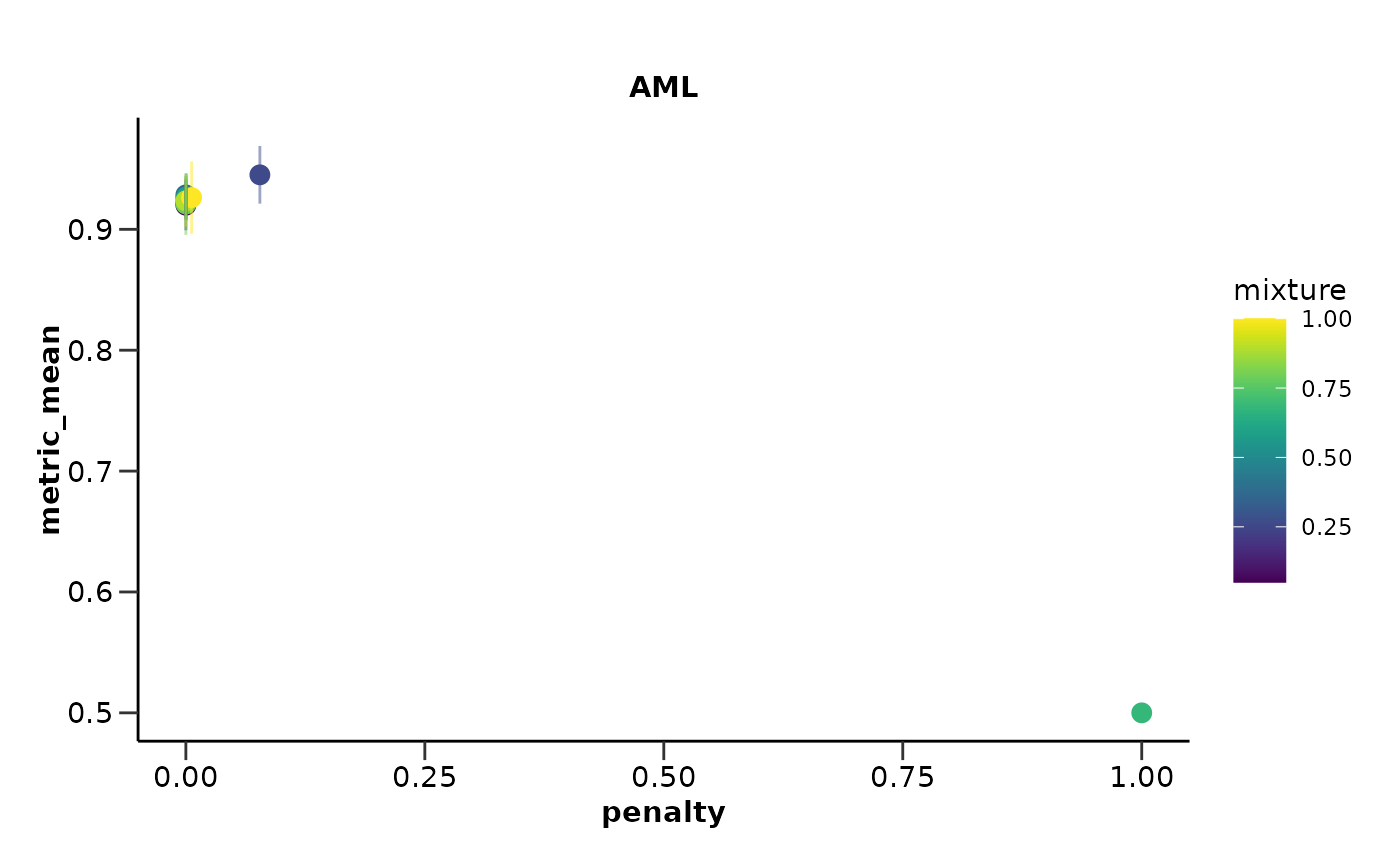

- hypopt_vis

Whether to visualize hyperparameter optimization results. Default is TRUE.

- palette

The color palette for the plot. If it is a character, it should be one of the palettes from

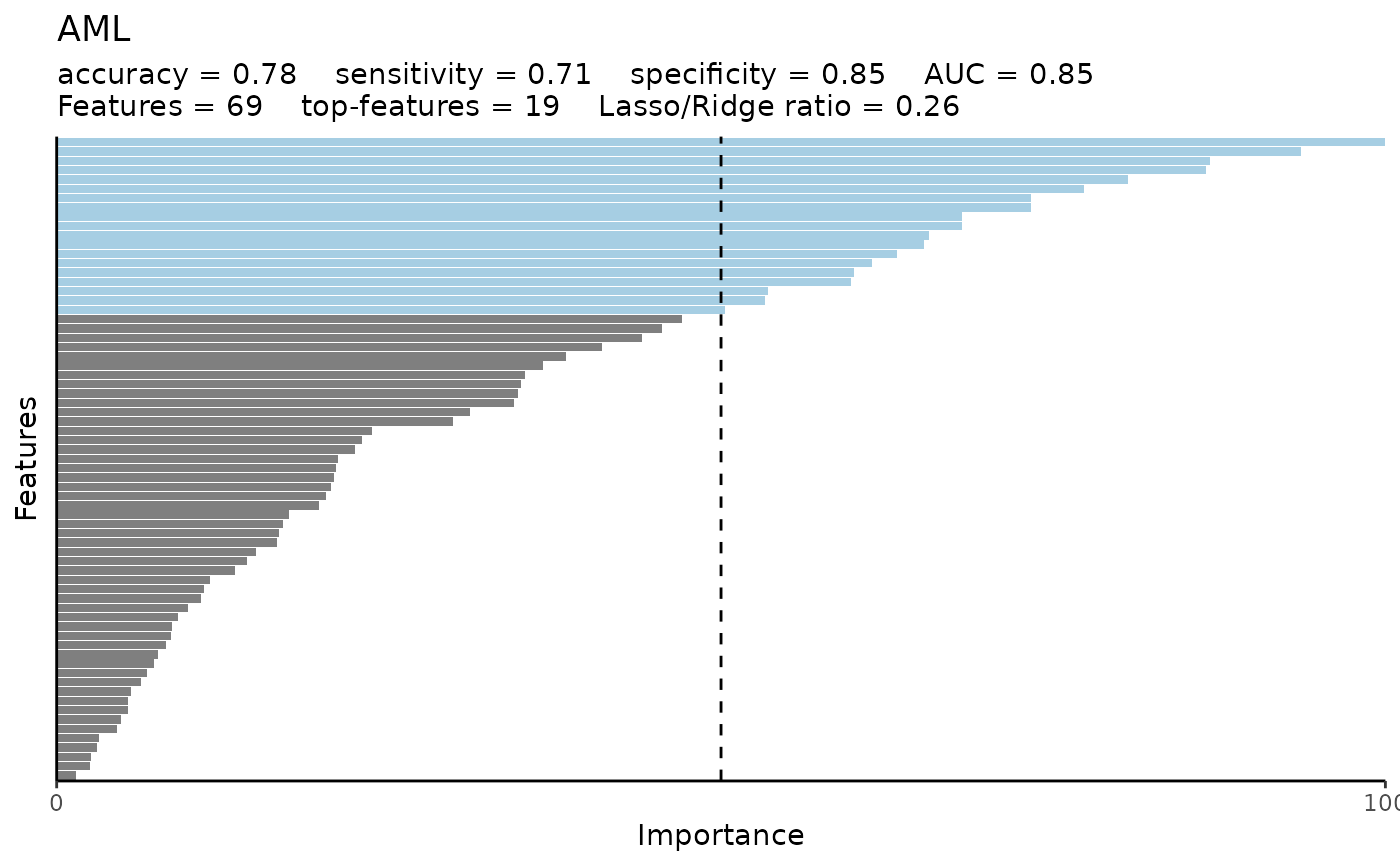

get_hpa_palettes(). Default is NULL.- vline

Whether to add a vertical line at 50% importance. Default is TRUE.

- subtitle

Character vector of the subtitle elements to include in the plot. Default includes all of them.

- varimp_yaxis_names

Whether to add y-axis names to the variable importance plot. Default is FALSE.

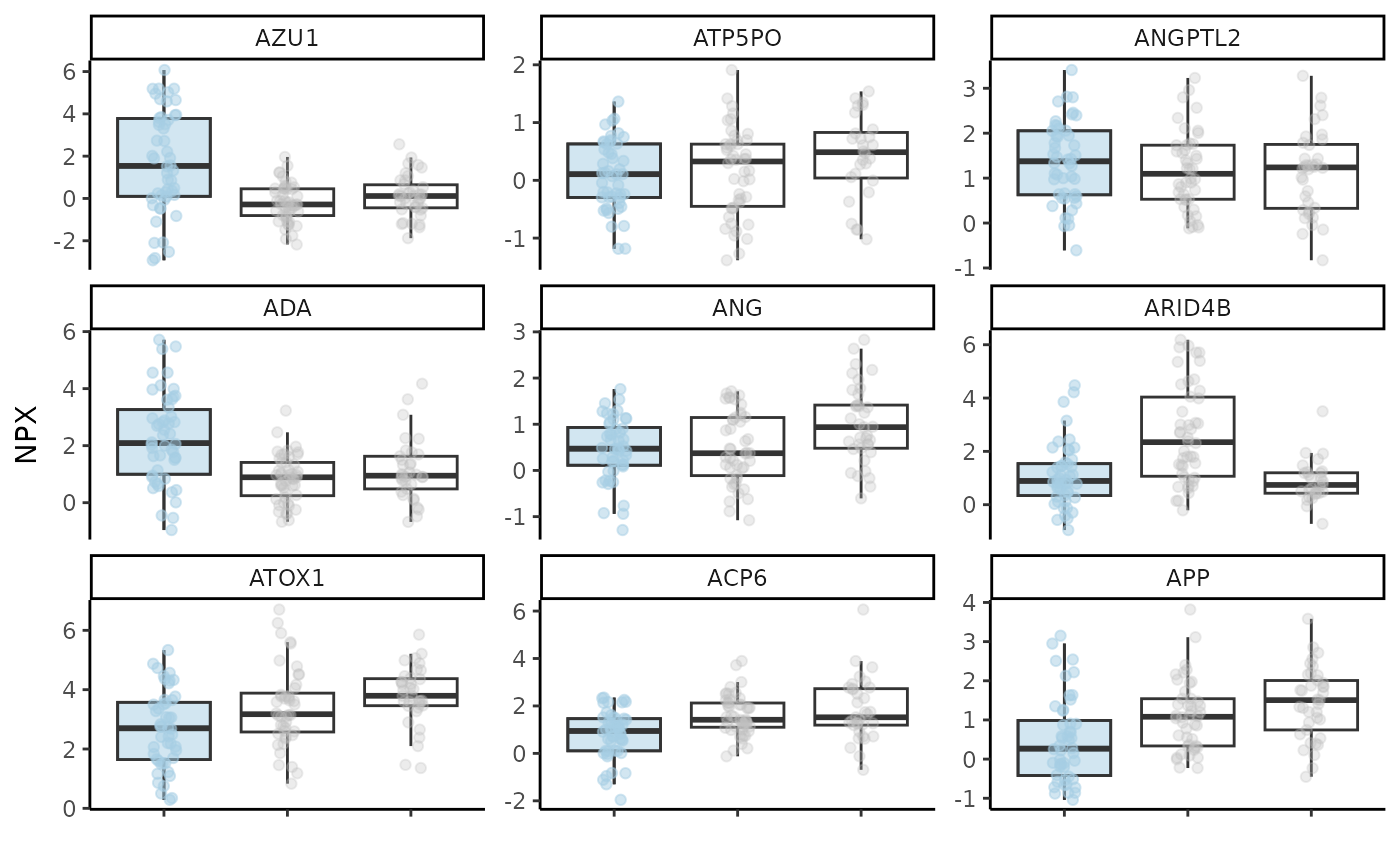

- nfeatures

Number of top features to include in the boxplot. Default is 9.

- points

Whether to add points to the boxplot. Default is TRUE.

- boxplot_xaxis_names

Whether to add x-axis names to the boxplot. Default is FALSE.

- seed

Seed for reproducibility. Default is 123.
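The type argument selects the penalty of the glmnet logistic regression shown in the example output, where the tuned mixture is passed to glmnet as alpha (alpha = 1 is the lasso, alpha = 0 is ridge) and penalty plays the role of glmnet's lambda. The sketch below writes out glmnet's elastic-net penalty term. How do_rreg() sets mixture for "lasso" and "ridge" is an assumption here (fixed at 1 and 0, tuned only for "elnet"), and type_to_mixture() and enet_penalty() are hypothetical helpers.

```r
# Hypothetical mapping from do_rreg()'s `type` to glmnet's alpha (parsnip `mixture`).
# Assumption: lasso and ridge fix the mixture; elnet leaves it to be tuned (NA here).
type_to_mixture <- function(type = c("lasso", "ridge", "elnet")) {
  type <- match.arg(type)
  switch(type, lasso = 1, ridge = 0, elnet = NA_real_)
}

# glmnet's elastic-net penalty for coefficients beta (intercept excluded):
# lambda * ((1 - alpha) / 2 * ||beta||_2^2 + alpha * ||beta||_1)
enet_penalty <- function(beta, lambda, alpha) {
  lambda * ((1 - alpha) / 2 * sum(beta^2) + alpha * sum(abs(beta)))
}

beta <- c(1, -2, 0)
enet_penalty(beta, lambda = 1, alpha = 1)  # lasso: 3
enet_penalty(beta, lambda = 1, alpha = 0)  # ridge: 2.5
# Best values reported in the example output (penalty 0.0774, mixture 0.261)
enet_penalty(beta, lambda = 0.0774, alpha = 0.261)
```

A mixture between 0 and 1, such as the 0.261 chosen in the example, keeps some of the lasso's ability to set coefficients exactly to zero while the ridge part spreads weight across correlated proteins.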

Value

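A list with results for each disease. The list contains:

- hypopt_res: Hyperparameter optimization results (tuning results, workflow, training and test sets, and the tuning plot).
- finalfit_res: Final model fitting results (the trained workflow, the best hyperparameters, and the finalized workflow).
- testfit_res: Test set evaluation results (accuracy, sensitivity, specificity, AUC, confusion matrix, ROC curve, and the mixture value).
- var_imp_res: Variable importance results (a feature importance table and plot).
- boxplot_res: Boxplots of the top features.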
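The accuracy, sensitivity and specificity in testfit_res follow directly from the confusion matrix. The sketch below recomputes them from the matrix printed in the example (rows are predictions, columns the truth). The printed sensitivity of 0.71 and specificity of 0.85 come out only when class "0" is treated as the positive class, which is consistent with yardstick's default event_level = "first"; the example output does not say whether "0" is the case or the control group, so check the factor levels before reading these two numbers. AUC cannot be recovered this way because it needs the predicted probabilities. class_metrics() is a hypothetical helper.

```r
# Confusion matrix copied from the example output (rows = Prediction, columns = Truth)
cm <- matrix(c(10, 4, 2, 11), nrow = 2,
             dimnames = list(Prediction = c("0", "1"), Truth = c("0", "1")))

# Hypothetical helper: metrics for a chosen positive class
class_metrics <- function(cm, positive) {
  negative <- setdiff(colnames(cm), positive)
  c(accuracy    = sum(diag(cm)) / sum(cm),
    sensitivity = cm[positive, positive] / sum(cm[, positive]),  # TP / (TP + FN)
    specificity = cm[negative, negative] / sum(cm[, negative]))  # TN / (TN + FP)
}

round(class_metrics(cm, positive = "0"), 2)  # accuracy 0.78, sensitivity 0.71, specificity 0.85
round(class_metrics(cm, positive = "1"), 2)  # accuracy 0.78, sensitivity 0.85, specificity 0.71
```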

Details

If the data contain missing values, KNN imputation will be applied.
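The imputation corresponds to the step_impute_knn() step listed in the recipe in the example output. To show the idea only, the sketch below fills each missing value with the mean of that feature over the k nearest rows. It is a simplification, not the recipes implementation: recipes measures similarity with Gower distance, while this sketch uses a root-mean-square difference over the features observed in the incomplete row. knn_impute() is a hypothetical helper.

```r
# Simplified KNN imputation (hypothetical helper, not recipes::step_impute_knn()).
# For each incomplete row, distances to the other rows use only the columns
# observed in that row; each missing value becomes the mean of the k nearest
# rows that have the value.
knn_impute <- function(x, k = 5) {
  x <- as.matrix(x)
  out <- x
  for (i in which(rowSums(is.na(x)) > 0)) {
    obs <- !is.na(x[i, ])
    if (!any(obs)) next                       # nothing to measure distance on
    ref <- x[i, obs]
    d <- apply(x[, obs, drop = FALSE], 1, function(r) {
      sqrt(mean((r - ref)^2, na.rm = TRUE))   # RMS difference over shared columns
    })
    d[i] <- Inf                               # never use the row itself
    for (j in which(!obs)) {
      cand <- which(!is.na(x[, j]) & is.finite(d))
      nn <- cand[order(d[cand])][seq_len(min(k, length(cand)))]
      out[i, j] <- mean(x[nn, j])             # neighbors' original values only
    }
  }
  out
}

x <- rbind(c(1, 1), c(2, 2), c(3, NA), c(10, 10))
knn_impute(x, k = 2)[3, 2]  # neighbors are rows 2 and 1, so the value is 1.5
```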

To skip the feature correlation check, set cor_threshold to 1.
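The correlation check corresponds to the step_corr() step listed in the recipe in the example output. A simplified base-R version of the idea: while some pair of features has an absolute correlation above the threshold, drop whichever member of the most correlated pair has the larger mean absolute correlation with the others. This is a sketch in the same spirit, not the recipes code, and drop_correlated() is a hypothetical helper.

```r
# Hypothetical greedy correlation filter, in the spirit of recipes::step_corr().
drop_correlated <- function(x, threshold = 0.9) {
  keep <- colnames(x)
  while (length(keep) > 1) {
    cm <- abs(cor(x[, keep, drop = FALSE], use = "pairwise.complete.obs"))
    diag(cm) <- 0
    top <- max(cm, na.rm = TRUE)
    if (top <= threshold) break               # every pair is within the limit
    pair <- keep[which(cm == top, arr.ind = TRUE)[1, ]]
    # drop the member of the pair that is more correlated with everything else
    mean_abs <- colMeans(cm[, pair, drop = FALSE], na.rm = TRUE)
    keep <- setdiff(keep, pair[which.max(mean_abs)])
  }
  keep
}

a <- 1:10
feats <- data.frame(a = a, b = 2 * a + c(0.1, -0.1), c = rep(c(1, -1), 5))
drop_correlated(feats, threshold = 0.9)  # "b" "c": "a" had the higher mean |r|
drop_correlated(feats, threshold = 1)    # "a" "b" "c": nothing removed
```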

Examples

do_rreg(example_data,
        example_metadata,
        case = "AML",
        control = c("CLL", "MYEL"),
        balance_groups = TRUE,
        wide = FALSE,
        type = "elnet",
        palette = "cancers12",
        cv_sets = 5,
        grid_size = 10,
        ncores = 1)

#> Joining with `by = join_by(DAid)`

#> Sets and groups are ready. Model fitting is starting...

#> Classification model for AML as case is starting...

#> $hypopt_res

#> $hypopt_res$elnet_tune

#> # Tuning results

#> # 5-fold cross-validation using stratification

#> # A tibble: 5 × 5

#> splits id .metrics .notes .predictions

#> <list> <chr> <list> <list> <list>

#> 1 <split [59/16]> Fold1 <tibble [10 × 6]> <tibble [0 × 3]> <tibble [160 × 7]>

#> 2 <split [59/16]> Fold2 <tibble [10 × 6]> <tibble [0 × 3]> <tibble [160 × 7]>

#> 3 <split [60/15]> Fold3 <tibble [10 × 6]> <tibble [0 × 3]> <tibble [150 × 7]>

#> 4 <split [61/14]> Fold4 <tibble [10 × 6]> <tibble [0 × 3]> <tibble [140 × 7]>

#> 5 <split [61/14]> Fold5 <tibble [10 × 6]> <tibble [0 × 3]> <tibble [140 × 7]>

#>

#> $hypopt_res$elnet_wf

#> ══ Workflow ════════════════════════════════════════════════════════════════════

#> Preprocessor: Recipe

#> Model: logistic_reg()

#>

#> ── Preprocessor ────────────────────────────────────────────────────────────────

#> 4 Recipe Steps

#>

#> • step_normalize()

#> • step_nzv()

#> • step_corr()

#> • step_impute_knn()

#>

#> ── Model ───────────────────────────────────────────────────────────────────────

#> Logistic Regression Model Specification (classification)

#>

#> Main Arguments:

#> penalty = tune::tune()

#> mixture = tune::tune()

#>

#> Computational engine: glmnet

#>

#>

#> $hypopt_res$train_set

#> # A tibble: 75 × 102

#> DAid AARSD1 ABL1 ACAA1 ACAN ACE2 ACOX1 ACP5 ACP6 ACTA2

#> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 DA00003 NA NA NA 0.989 NA 0.330 1.37 NA NA

#> 2 DA00004 3.41 3.38 1.69 NA 1.52 NA 0.841 0.582 1.70

#> 3 DA00005 5.01 5.05 0.128 0.401 -0.933 -0.584 0.0265 1.16 2.73

#> 4 DA00007 NA NA 3.96 0.682 3.14 2.62 1.47 2.25 2.01

#> 5 DA00008 2.78 0.812 -0.552 0.982 -0.101 -0.304 0.376 -0.826 1.52

#> 6 DA00009 4.39 3.34 -0.452 -0.868 0.395 1.71 1.49 -0.0285 0.200

#> 7 DA00010 1.83 1.21 -0.912 -1.04 -0.0918 -0.304 1.69 0.0920 2.04

#> 8 DA00011 3.48 4.96 3.50 -0.338 4.48 1.26 2.18 1.62 1.79

#> 9 DA00012 4.31 0.710 -1.44 -0.218 -0.469 -0.361 -0.0714 -1.30 2.86

#> 10 DA00013 1.31 2.52 1.11 0.997 4.56 -1.35 0.833 2.33 3.57

#> # ℹ 65 more rows

#> # ℹ 92 more variables: ACTN4 <dbl>, ACY1 <dbl>, ADA <dbl>, ADA2 <dbl>,

#> # ADAM15 <dbl>, ADAM23 <dbl>, ADAM8 <dbl>, ADAMTS13 <dbl>, ADAMTS15 <dbl>,

#> # ADAMTS16 <dbl>, ADAMTS8 <dbl>, ADCYAP1R1 <dbl>, ADGRE2 <dbl>, ADGRE5 <dbl>,

#> # ADGRG1 <dbl>, ADGRG2 <dbl>, ADH4 <dbl>, ADM <dbl>, AGER <dbl>, AGR2 <dbl>,

#> # AGR3 <dbl>, AGRN <dbl>, AGRP <dbl>, AGXT <dbl>, AHCY <dbl>, AHSP <dbl>,

#> # AIF1 <dbl>, AIFM1 <dbl>, AK1 <dbl>, AKR1B1 <dbl>, AKR1C4 <dbl>, …

#>

#> $hypopt_res$test_set

#> # A tibble: 27 × 102

#> DAid AARSD1 ABL1 ACAA1 ACAN ACE2 ACOX1 ACP5 ACP6 ACTA2

#> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 DA00001 3.39 2.76 1.71 0.0333 1.76 -0.919 1.54 2.15 2.81

#> 2 DA00002 1.42 1.25 -0.816 -0.459 0.826 -0.902 0.647 1.30 0.798

#> 3 DA00006 6.83 1.18 -1.74 -0.156 1.53 -0.721 0.620 0.527 0.772

#> 4 DA00016 1.79 1.36 0.106 -0.372 3.40 -1.19 1.77 1.07 2.00

#> 5 DA00022 7.07 5.67 3.68 -0.458 3.09 0.690 0.649 2.17 1.83

#> 6 DA00023 2.92 -0.0000706 0.602 1.59 0.198 1.61 0.283 2.35 2.11

#> 7 DA00034 3.45 2.91 1.31 0.423 0.647 1.40 0.691 0.720 1.95

#> 8 DA00035 4.39 3.31 0.454 0.290 2.68 0.116 -1.32 0.945 2.14

#> 9 DA00038 2.23 1.42 0.484 1.72 1.46 0.0747 1.82 0.109 4.27

#> 10 DA00039 4.26 0.572 -1.97 -0.433 0.208 0.790 -0.236 1.52 0.652

#> # ℹ 17 more rows

#> # ℹ 92 more variables: ACTN4 <dbl>, ACY1 <dbl>, ADA <dbl>, ADA2 <dbl>,

#> # ADAM15 <dbl>, ADAM23 <dbl>, ADAM8 <dbl>, ADAMTS13 <dbl>, ADAMTS15 <dbl>,

#> # ADAMTS16 <dbl>, ADAMTS8 <dbl>, ADCYAP1R1 <dbl>, ADGRE2 <dbl>, ADGRE5 <dbl>,

#> # ADGRG1 <dbl>, ADGRG2 <dbl>, ADH4 <dbl>, ADM <dbl>, AGER <dbl>, AGR2 <dbl>,

#> # AGR3 <dbl>, AGRN <dbl>, AGRP <dbl>, AGXT <dbl>, AHCY <dbl>, AHSP <dbl>,

#> # AIF1 <dbl>, AIFM1 <dbl>, AK1 <dbl>, AKR1B1 <dbl>, AKR1C4 <dbl>, …

#>

#> $hypopt_res$hypopt_vis

#>

#>

#> $finalfit_res

#> $finalfit_res$final

#> ══ Workflow [trained] ══════════════════════════════════════════════════════════

#> Preprocessor: Recipe

#> Model: logistic_reg()

#>

#> ── Preprocessor ────────────────────────────────────────────────────────────────

#> 4 Recipe Steps

#>

#> • step_normalize()

#> • step_nzv()

#> • step_corr()

#> • step_impute_knn()

#>

#> ── Model ───────────────────────────────────────────────────────────────────────

#>

#> Call: glmnet::glmnet(x = maybe_matrix(x), y = y, family = "binomial", alpha = ~0.261111111111111)

#>

#> Df %Dev Lambda

#> 1 0 0.00 0.97210

#> 2 3 0.87 0.92790

#> 3 3 1.92 0.88570

#> 4 3 2.95 0.84540

#> 5 3 3.96 0.80700

#> 6 4 5.16 0.77030

#> 7 4 6.42 0.73530

#> 8 5 7.66 0.70190

#> 9 8 9.15 0.67000

#> 10 10 11.01 0.63950

#> 11 11 12.97 0.61050

#> 12 12 14.94 0.58270

#> 13 12 16.86 0.55620

#> 14 14 18.80 0.53100

#> 15 14 20.69 0.50680

#> 16 14 22.51 0.48380

#> 17 14 24.27 0.46180

#> 18 15 25.98 0.44080

#> 19 15 27.67 0.42080

#> 20 16 29.31 0.40170

#> 21 18 30.99 0.38340

#> 22 19 32.65 0.36600

#> 23 20 34.29 0.34930

#> 24 20 35.94 0.33350

#> 25 22 37.56 0.31830

#> 26 22 39.18 0.30380

#> 27 24 40.80 0.29000

#> 28 24 42.39 0.27680

#> 29 25 43.95 0.26430

#> 30 26 45.47 0.25230

#> 31 27 46.96 0.24080

#> 32 27 48.40 0.22980

#> 33 28 49.80 0.21940

#> 34 30 51.17 0.20940

#> 35 32 52.53 0.19990

#> 36 35 53.90 0.19080

#> 37 35 55.28 0.18210

#> 38 35 56.62 0.17390

#> 39 36 57.91 0.16600

#> 40 36 59.17 0.15840

#> 41 37 60.38 0.15120

#> 42 37 61.56 0.14430

#> 43 37 62.70 0.13780

#> 44 38 63.82 0.13150

#> 45 40 64.91 0.12550

#> 46 40 66.01 0.11980

#>

#> ...

#> and 54 more lines.

#>

#> $finalfit_res$best

#> # A tibble: 1 × 2

#> penalty mixture

#> <dbl> <dbl>

#> 1 0.0774 0.261

#>

#> $finalfit_res$final_wf

#> ══ Workflow ════════════════════════════════════════════════════════════════════

#> Preprocessor: Recipe

#> Model: logistic_reg()

#>

#> ── Preprocessor ────────────────────────────────────────────────────────────────

#> 4 Recipe Steps

#>

#> • step_normalize()

#> • step_nzv()

#> • step_corr()

#> • step_impute_knn()

#>

#> ── Model ───────────────────────────────────────────────────────────────────────

#> Logistic Regression Model Specification (classification)

#>

#> Main Arguments:

#> penalty = 0.0774263682681128

#> mixture = 0.261111111111111

#>

#> Computational engine: glmnet

#>

#>

#>

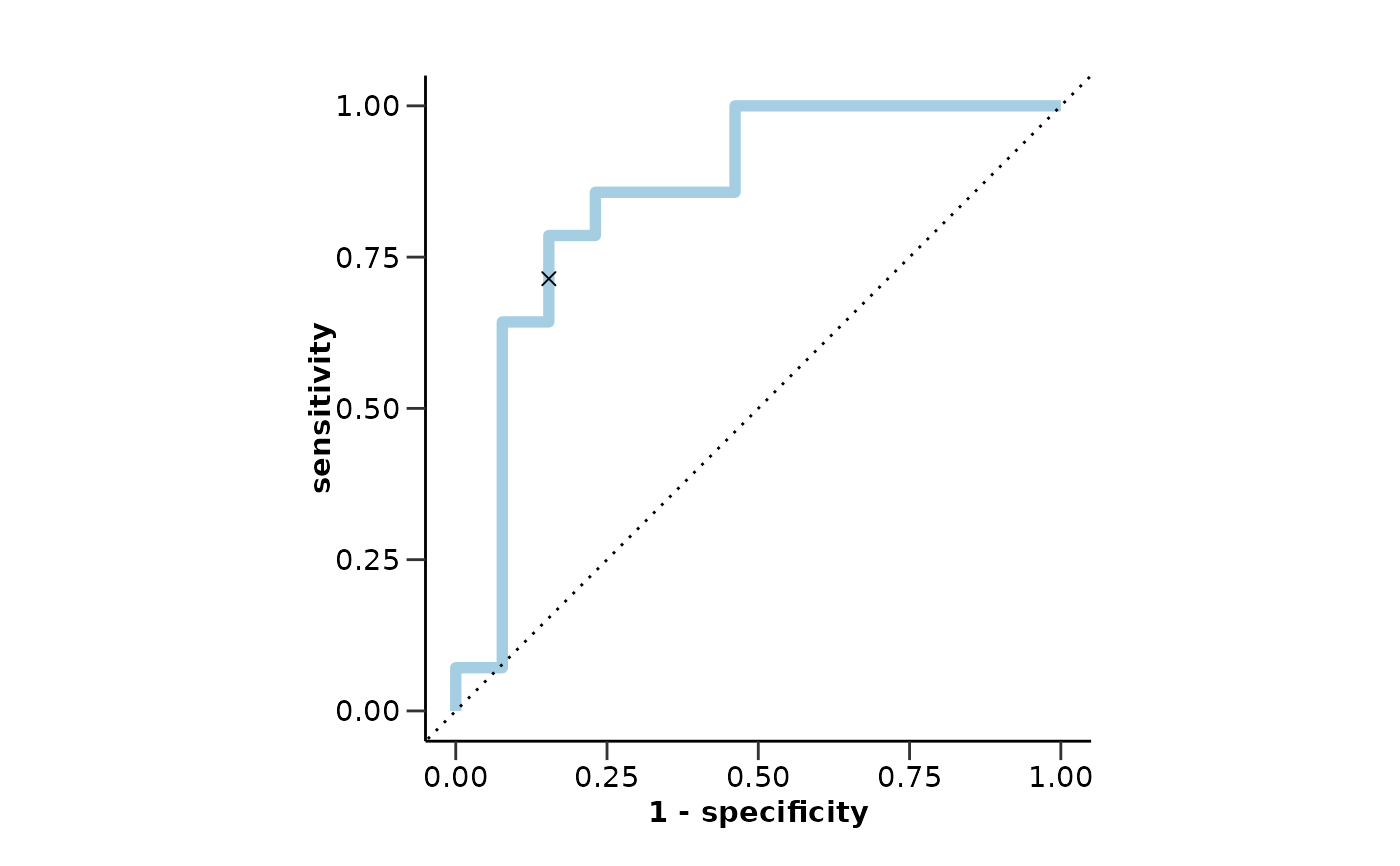

#> $testfit_res

#> $testfit_res$metrics

#> $testfit_res$metrics$accuracy

#> [1] 0.78

#>

#> $testfit_res$metrics$sensitivity

#> [1] 0.71

#>

#> $testfit_res$metrics$specificity

#> [1] 0.85

#>

#> $testfit_res$metrics$auc

#> [1] 0.85

#>

#> $testfit_res$metrics$conf_matrix

#> Truth

#> Prediction 0 1

#> 0 10 2

#> 1 4 11

#>

#> $testfit_res$metrics$roc_curve

#>

#>


#> $testfit_res$mixture

#> [1] 0.2611111

#>

#>

#> $var_imp_res

#> $var_imp_res$features

#> # A tibble: 69 × 4

#> Variable Importance Sign Scaled_Importance

#> <fct> <dbl> <chr> <dbl>

#> 1 AZU1 0.773 POS 100

#> 2 ATP5PO 0.724 NEG 93.6

#> 3 ANGPTL2 0.671 POS 86.8

#> 4 ADA 0.669 POS 86.5

#> 5 ANG 0.624 NEG 80.6

#> 6 ARID4B 0.598 NEG 77.3

#> 7 ATOX1 0.567 NEG 73.3

#> 8 ACP6 0.567 NEG 73.3

#> 9 APP 0.527 NEG 68.2

#> 10 ADAM8 0.527 NEG 68.1

#> # ℹ 59 more rows

#>

#> $var_imp_res$var_imp_plot

#>

#>


#> $boxplot_res

#> Warning: Removed 69 rows containing non-finite outside the scale range

#> (`stat_boxplot()`).

#> Warning: Removed 13 rows containing non-finite outside the scale range

#> (`stat_boxplot()`).

#> Warning: Removed 56 rows containing missing values or values outside the scale range

#> (`geom_point()`).

#> Warning: Removed 13 rows containing missing values or values outside the scale range

#> (`geom_point()`).

#>

#>
